RAG, or Retrieval-Augmented Generation, is transforming the landscape of AI cognition by changing how models access and use information. Instead of relying only on what a model absorbed during training, a RAG system looks up relevant documents at query time and hands them to the model. As you explore this approach, you’ll discover how combining retrieval and generation can improve the accuracy of AI responses and help models handle questions that depend on specific or recent context. This evolution can support better decision-making and streamline information processing, paving the way for a new era in intelligent systems. Understanding RAG is important for getting the most out of AI in today’s fast-paced digital world.

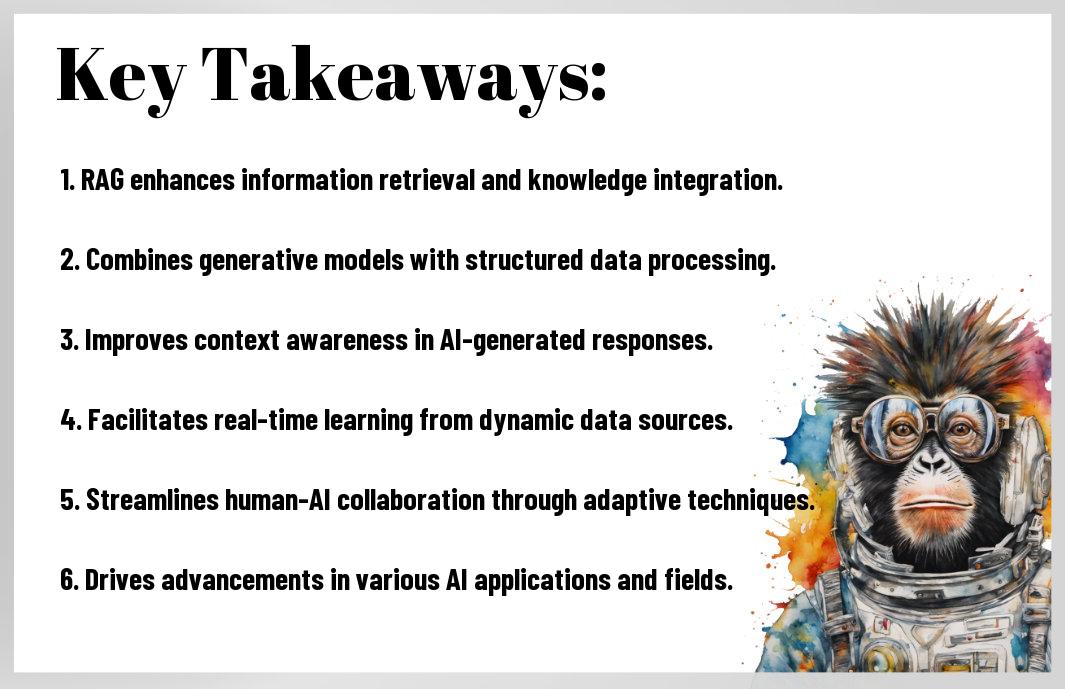

Key Takeaways:

- Enhanced Knowledge Retrieval: RAG combines generation and retrieval, allowing AI to access vast external knowledge sources, improving the accuracy and relevance of information.

- Dynamic Response Generation: By integrating retrieval mechanisms, RAG enables AI models to provide contextually rich and adaptive responses tailored to user queries.

- Reduced Hallucinations: RAG reduces the common AI problem of generating inaccurate information by grounding responses in retrieved source documents, though its output is only as reliable as those sources.

- Scalability: The knowledge base can grow to very large document collections without retraining the model, provided the retrieval infrastructure is scaled to keep performance acceptable.

- Task Flexibility: The same model can serve different tasks and industries by pointing its retriever at different document collections, enhancing its utility across applications.

- User Interaction: With its responsive nature, RAG fosters more engaging and intuitive interactions, improving user experience in AI applications.

- Improved Training Efficiency: By drawing on external documents rather than only the knowledge stored in the model’s parameters, RAG reduces the need to retrain a model whenever information changes; updating the document collection is often enough.

Understanding RAG: Definition and Mechanism

While traditional AI models rely predominantly on knowledge fixed at training time, RAG (Retrieval-Augmented Generation) presents a notable shift by merging retrieval capabilities with generative tasks. A RAG system searches an external document collection at query time and passes what it finds to the model, improving its awareness of context and the quality of its responses. As a user, you interact with AI whose answers can reflect up-to-date information (as current as the collection it searches), making the model better informed and more versatile in handling diverse queries.

At the core of RAG are two components: a retriever and a generator. The retriever searches a large pool of documents or databases and fetches the passages most relevant to your query, giving the AI access to knowledge it may not have learned during training. Once the retriever has gathered pertinent data, the generator, typically a large language model, uses that information to construct a coherent, contextually relevant response. This two-stage structure lets RAG produce answers that are grounded in specific sources and rich in detail, which makes your interactions with AI more informative.
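To make the two stages concrete, here is a minimal sketch in Python. It assumes a tiny in-memory document list and uses simple bag-of-words cosine similarity as the retriever; the `generate` function is a hypothetical placeholder that only builds the prompt a real system would send to a language model.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retriever: rank documents by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def generate(query: str, passages: list[str]) -> str:
    """Hypothetical generator stand-in: a real system would send this prompt to a language model."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer the question using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "The Eiffel Tower is located in Paris.",
    "Python was created by Guido van Rossum.",
    "Paris is the capital of France.",
]
passages = retrieve("Where is the Eiffel Tower?", docs)
print(generate("Where is the Eiffel Tower?", passages))
```

Swapping the toy retriever for a vector database, or the placeholder for an actual model call, leaves the overall retrieve-then-generate flow unchanged.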

The interplay between retrieval and generation is what sets RAG apart. Because the retriever consults the document collection each time you ask a question, responses can reflect new information as soon as it is added to that collection, without retraining the model. This pushes beyond the limitations of static models, though it comes at a cost: the retrieval step adds some latency, and answer quality depends on how well the retriever finds the right passages. With RAG, you’re not limited to knowledge frozen at training time; you’re engaging with a system whose knowledge can be kept current, enriching your exploration of various subjects.
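The point that new knowledge arrives through the index rather than through retraining can be sketched with a hypothetical keyword index (`KeywordIndex` is an illustrative name, not a library class). Adding a document changes what the next search returns, while no model is touched.

```python
import re


class KeywordIndex:
    """Hypothetical minimal inverted index: maps each term to the ids of documents containing it."""

    def __init__(self) -> None:
        self.docs: dict[int, str] = {}
        self.postings: dict[str, set[int]] = {}

    def add(self, doc_id: int, text: str) -> None:
        """Adding a document only updates the index; no model weights change."""
        self.docs[doc_id] = text
        for term in set(re.findall(r"[a-z0-9]+", text.lower())):
            self.postings.setdefault(term, set()).add(doc_id)

    def search(self, query: str) -> list[str]:
        """Return documents sharing at least one query term, most matching terms first."""
        hits: dict[int, int] = {}
        for term in set(re.findall(r"[a-z0-9]+", query.lower())):
            for doc_id in self.postings.get(term, ()):
                hits[doc_id] = hits.get(doc_id, 0) + 1
        ranked = sorted(hits, key=lambda d: (-hits[d], d))
        return [self.docs[d] for d in ranked]


index = KeywordIndex()
index.add(1, "The 2023 pricing sheet lists the basic plan at $10.")
print(index.search("2024 basic plan"))  # only the 2023 sheet exists so far
index.add(2, "The 2024 pricing sheet lists the basic plan at $12.")
print(index.search("2024 basic plan"))  # the new sheet now ranks first
```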

Components of RAG

Understanding the two primary components of RAG, the retriever and the generator, helps explain its capabilities. They play distinct yet complementary roles in the RAG architecture. The retriever works like a search engine: it scans a large repository of information and extracts the documents or passages most relevant to your query. Using ranking functions and indexing techniques (for example, keyword scoring or similarity search over vector embeddings), it aims to supply the generator with information that is both timely and relevant. As a result, responses can adapt to your query in ways that conventional models relying only on training data cannot.
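One classic ranking function a retriever can use is Okapi BM25, which scores documents by how often query terms appear in them, discounted for common terms and long documents. The sketch below is a plain-Python illustration using typical parameter values (`k1 = 1.5`, `b = 0.75`) and the non-negative IDF variant used by Lucene; production systems typically rely on a search engine or a vector index instead.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25:
    """Okapi BM25 ranking over a small in-memory document list."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75) -> None:
        self.docs = docs
        self.tfs = [Counter(tokenize(d)) for d in docs]     # term frequencies per document
        self.lens = [sum(tf.values()) for tf in self.tfs]    # document lengths in tokens
        self.avgdl = sum(self.lens) / len(docs)
        self.k1, self.b = k1, b
        df = Counter(term for tf in self.tfs for term in tf)  # number of docs containing each term
        n = len(docs)
        # Non-negative IDF variant: ln(1 + (N - df + 0.5) / (df + 0.5))
        self.idf = {t: math.log(1 + (n - f + 0.5) / (f + 0.5)) for t, f in df.items()}

    def score(self, query: str, i: int) -> float:
        tf, dl = self.tfs[i], self.lens[i]
        total = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            f = tf[term]
            # Saturate term frequency and normalize by document length relative to the average
            norm = f + self.k1 * (1 - self.b + self.b * dl / self.avgdl)
            total += self.idf[term] * f * (self.k1 + 1) / norm
        return total

    def top_k(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        scored = [(d, self.score(query, i)) for i, d in enumerate(self.docs)]
        return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


docs = [
    "solar panels convert sunlight into electricity",
    "wind turbines generate electricity from wind",
    "sunlight is needed for photosynthesis",
]
bm25 = BM25(docs)
print(bm25.top_k("solar electricity", k=2))
```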

The generator in RAG is where creativity and conversational intelligence come into play. Using the retrieved information, the generator, typically a large language model, synthesizes answers that are coherent and aligned with your specific needs. It relies on natural language generation to produce fluent, logical text. For you as a user, this means that interacting with AI feels more like a conversation in which your input shapes the system’s output, leading to responses that feel personalized rather than generic. This fluid exchange of information shows the strength of RAG in supporting more meaningful dialogue.
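How retrieved passages reach the generator varies by system. A common pattern, sketched here under assumptions, is to number the passages, pack them into the prompt up to a size budget, and instruct the model to cite them. The `build_prompt` name and the character-based budget are illustrative; real systems usually budget in model tokens.

```python
def build_prompt(question: str, passages: list[str], max_chars: int = 500) -> str:
    """Pack retrieved passages, best first, into a numbered context block under a size budget."""
    lines: list[str] = []
    used = 0
    for i, passage in enumerate(passages, start=1):
        entry = f"[{i}] {passage}"
        if used + len(entry) > max_chars:
            break  # stop before exceeding the assumed context budget
        lines.append(entry)
        used += len(entry)
    context = "\n".join(lines)
    return (
        "Answer using only the numbered sources below and cite them like [1].\n"
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


prompt = build_prompt(
    "When was the library founded?",
    ["The library was founded in 1901.", "It moved to a new building in 1975."],
    max_chars=40,  # deliberately tiny so only the first passage fits
)
print(prompt)
```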

Together, the retriever and generator give RAG its distinctive strength in AI cognition. This two-part design lets the system draw on wide-ranging datasets, producing responses that are both relevant and rich in the details you may need. Understanding these components helps you use RAG more effectively and get insightful, accurate, and timely information from your interactions.

How RAG Differs from Traditional AI Models

Compared with traditional AI models, RAG stands out for its flexibility in information processing. A traditional language model relies exclusively on what it learned from its training data, so its knowledge stops at a training cutoff and grows stale over time. In contrast, RAG can consult an external collection that is kept up to date, which improves the depth and relevance of its answers. Traditional models may still deliver knowledgeable answers, but they lack the adaptability that comes from combining retrieval and generation. This difference means you can gain richer, more current insights when interacting with a RAG system.

Mechanism-wise, RAG stands out because its knowledge can be refreshed by updating the document collection rather than retraining the model. Traditional AI models, on the other hand, often struggle with outdated knowledge, which can lead to inaccuracies in their output. This access to updated information is one of RAG’s most significant advantages, making it an essential tool for tasks that require current information. As a result, you can rely on AI not just for more precise answers but also to help you keep up with evolving topics.

The Impact of RAG on AI Knowledge Retrieval

There’s a significant transformation happening in the way artificial intelligence systems retrieve and use knowledge, largely thanks to the introduction of Retrieval-Augmented Generation (RAG). By combining traditional retrieval methods with generative capabilities, RAG improves the performance of AI systems in several critical ways. You will find that RAG enables these systems to access an extensive pool of knowledge, which can be incorporated into responses on the fly. This shift allows AI not only to generate text but also to ground it in information drawn from a wide array of sources, enhancing the overall quality of its output.

Enhancing Contextual Awareness

The impact on your interaction with AI becomes clearer when you consider how RAG enhances contextual awareness. With this approach, the AI can address the nuances of your query by pulling in relevant data from its knowledge base. When you ask a question, the AI doesn’t rely only on what it memorized during training; instead, it retrieves contextually relevant information to craft a more meaningful and tailored answer. This level of contextual adaptation makes the experience more engaging and informative, as you’re likely to receive responses that go beyond surface-level understanding.

Furthermore, RAG can foster a more natural conversational flow. When you engage with an AI system using this technology, it should feel more like a dialogue than an exchange with a machine. When the system takes earlier turns into account while retrieving, it can track the progression of conversation topics and pull in information pertinent to your line of questioning. This makes the experience smoother and helps you dig deeper into your inquiries, with answers rooted in the most relevant data the system can find.
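For retrieval to follow a conversation, the search query has to carry context from earlier turns; a follow-up such as "What replaced it?" matches almost nothing on its own. A minimal sketch, assuming the system simply prepends the last few user turns (the `retrieval_query` helper is hypothetical; many systems instead ask the language model to rewrite the follow-up into a standalone question):

```python
def retrieval_query(history: list[str], current: str, window: int = 2) -> str:
    """Combine the last `window` user turns with the current message into one search query."""
    # Guard window == 0 explicitly: history[-0:] would return the whole list.
    recent = history[-window:] if window > 0 else []
    return " ".join(recent + [current])


history = ["Tell me about the Hubble Space Telescope.", "When was it launched?"]
print(retrieval_query(history, "What replaced it?"))
```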

Improving Accuracy and Relevance

The impact of RAG on the accuracy and relevance of the information you receive can be substantial. By enabling the AI to retrieve data from a regularly updated knowledge base, your interactions can become considerably more precise. You are less likely to be stuck with outdated or generic responses, because RAG lets the AI system access current information, enhancing its reliability and making your decision-making more informed. This accuracy is especially important in critical areas where decisions must rest on the most reliable data available, and because answers can point back to their sources, you can check the information when it matters most.
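One way systems protect accuracy is to decline to answer when retrieval finds nothing sufficiently relevant, rather than letting the generator guess. A hedged sketch, assuming retrieval scores on a 0 to 1 scale and an arbitrary illustrative threshold of 0.3 (`answer_or_abstain` is a hypothetical helper):

```python
def answer_or_abstain(scored: list[tuple[str, float]], threshold: float = 0.3) -> str:
    """Only answer from passages whose retrieval score clears a threshold; otherwise abstain."""
    supported = [(text, score) for text, score in scored if score >= threshold]
    if not supported:
        return "I could not find a reliable source for that."
    best_text, _ = max(supported, key=lambda pair: pair[1])
    # A real system would pass the supported passages to the generator; here we cite the best one.
    return f"Based on the source: {best_text}"


print(answer_or_abstain([("Refunds are accepted within 30 days.", 0.82)]))
print(answer_or_abstain([("Unrelated shipping note.", 0.12)]))
```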

Some RAG deployments also add a feedback loop in which the system uses your ratings or interactions to improve how it ranks retrieved information. As you engage with such a system, it can learn which sources and answers tend to be helpful, optimizing future responses. This helps ensure not only that answers are accurate but also that they are tailored to your specific needs and interests. With this ongoing refinement, your AI experience can become increasingly aligned with your expectations, enhancing the effectiveness of your knowledge retrieval process.
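Such a feedback loop is an add-on rather than part of the core RAG mechanism. As a hypothetical sketch, a re-ranker can nudge each document’s retrieval score by its net helpful votes; the `FeedbackReranker` class and its `weight` value are illustrative assumptions.

```python
from collections import defaultdict


class FeedbackReranker:
    """Hypothetical re-ranker: adjusts retrieval scores using past helpful/unhelpful votes."""

    def __init__(self, weight: float = 0.1) -> None:
        self.weight = weight
        self.votes: dict[str, int] = defaultdict(int)  # net votes per document id

    def record(self, doc_id: str, helpful: bool) -> None:
        self.votes[doc_id] += 1 if helpful else -1

    def rerank(self, results: list[tuple[str, float]]) -> list[tuple[str, float]]:
        """Add weight * net votes to each score, then sort best first."""
        adjusted = [(doc_id, score + self.weight * self.votes[doc_id]) for doc_id, score in results]
        return sorted(adjusted, key=lambda pair: pair[1], reverse=True)


reranker = FeedbackReranker()
reranker.record("faq-2", helpful=True)
reranker.record("faq-2", helpful=True)
reranked = reranker.rerank([("faq-1", 0.80), ("faq-2", 0.75)])
print(reranked)
```

In practice you would cap or decay votes so that popular documents do not permanently crowd out more relevant ones.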

RAG’s Role in Multimodal AI Applications

Amid rapid advancements in artificial intelligence, integrating inputs from multiple modalities has become a focal point for innovation and efficiency. RAG, or Retrieval-Augmented Generation, is emerging as a key technique for multimodal AI applications. It lets systems pull from large databases while generating contextually relevant responses, improving their handling of both textual and visual data. As articulated in A New Paradigm in Generative AI Applications: Navigating …, RAG’s ability to synthesize information from diverse sources is central to changing how AI perceives and interacts with the world around it.

Integration with Visual and Textual Data

A multimodal RAG system can integrate visual and textual data streams. When text and images are mapped into a shared representation (for example, a common embedding space), the system can compare and merge these disparate inputs within a single framework that provides richer context. For instance, if you ask about a specific product while sharing an image of that product, a multimodal RAG system can search using both the text and the image to produce a more accurate, comprehensive response. This capability breaks down barriers in information retrieval and comprehension, enabling a more natural interaction with AI.
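A sketch of the shared-embedding idea, under loud assumptions: the 3-dimensional vectors below are made-up stand-ins for the output of an image-text encoder, and averaging the text and photo embeddings is just one simple way to fuse a mixed query.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def fuse(*vectors: list[float]) -> list[float]:
    """Average several query embeddings (e.g. text + photo) into one query vector."""
    return [sum(vals) / len(vectors) for vals in zip(*vectors)]


# Hypothetical toy embeddings standing in for a shared image-text encoder's output.
catalog = {
    ("text", "Trail runner shoe spec sheet"): [0.9, 0.1, 0.0],
    ("image", "trail-runner-side.jpg"): [0.8, 0.2, 0.1],
    ("text", "Leather office shoe care guide"): [0.1, 0.9, 0.2],
}


def search(query_vec: list[float], k: int = 2) -> list[tuple[str, str]]:
    """Rank text and image items together, since they live in the same vector space."""
    ranked = sorted(catalog, key=lambda item: cosine(query_vec, catalog[item]), reverse=True)
    return ranked[:k]


text_query = [0.7, 0.3, 0.0]   # assumed embedding of "lightweight running shoe"
photo_query = [0.9, 0.0, 0.1]  # assumed embedding of the user's uploaded photo
print(search(fuse(text_query, photo_query)))
```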

Moreover, the integration of visual and textual data opens up new uses in applications ranging from customer service to creative industries. Businesses can deploy RAG-powered systems that not only respond to inquiries with text-based answers but also reference associated images, enhancing user engagement and satisfaction. In scenarios such as online shopping, you can describe a product, and the AI responds with both textual information and relevant images, providing a holistic view that improves your shopping experience. This synergy between modalities lets you access a wealth of information in a more intuitive manner.

Case Studies in Industry

By examining real-world case studies, you can appreciate the impact of RAG across various sectors. Companies that have implemented RAG technology report improvements in their operations, ranging from enhanced customer engagement to increased productivity. Here are some reported examples:

- Retail: A leading e-commerce platform utilized RAG to integrate customer reviews and product images, resulting in a 30% increase in conversion rates.

- Healthcare: A medical AI firm deployed RAG to analyze both patient history and diagnostic images, achieving a 20% reduction in misdiagnosis.

- Marketing: A digital advertising agency integrated RAG for crafting personalized ad experiences, leading to a 50% boost in user engagement metrics within a single campaign.

- Gaming: An interactive gaming company applied RAG technology to personalize player experiences, seeing a 40% rise in user retention over three months.

Understanding the transformation that RAG brings to various industries is important for realizing its full potential. By harnessing the capabilities of RAG, your organization can drive innovation by combining data-rich images with personalized responses. This method not only enhances user satisfaction but also optimizes operational efficiencies, allowing you to react quickly to market changes and consumer needs. The integration of visual and textual data fundamentally reshapes user interaction, creating an enriched experience that blends creativity with practicality.

Addressing Challenges and Limitations of RAG

Many professionals and enthusiasts are excited about the potential of Retrieval-Augmented Generation (RAG) to reshape AI cognition. However, it’s important to examine the challenges and limitations that accompany its implementation. As with any innovative technology, RAG has hurdles that must be addressed to fully harness its capabilities. Understanding these challenges is vital for applying RAG effectively in AI systems and for preparing for the complexities that may arise. For an in-depth exploration of RAG’s impact, you can read more about Retrieval-Augmented Generation (RAG) in AI: A Quantum Leap for the Industry.

Data Dependency Issues

Above all, the effectiveness of RAG relies heavily on the quality and diversity of the data it retrieves. If your retrieval system is limited to a narrow set of data sources, the outputs generated through RAG will be similarly constricted. The richness of the generated text is therefore closely tied to the variety and quality of the data available. Ensuring access to comprehensive, high-quality datasets is imperative; if you’re not cautious, you may find your applications lacking the depth and relevance that users expect. Biased or incomplete sources also pose a significant risk, potentially leading to the spread of misinformation.

Furthermore, the contextual nuances of the data play a critical role in how RAG performs. You will need to ensure that the data not only covers a wide range of topics but also remains contextually relevant. This requires a diligent curation process in which data is continually updated to reflect ongoing changes in knowledge and cultural context. Ignoring this aspect could result in AI systems producing outputs that are outdated or contextually inappropriate. Therefore, while RAG offers vast potential, its reliance on data highlights the need for ongoing investment in data management strategies to mitigate these issues.
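Part of that curation can be automated. The sketch below, with hypothetical field names (`id`, `text`, `updated`) and an arbitrary one-year freshness window, drops exact duplicates after normalizing case and whitespace and flags stale documents for review before they are indexed.

```python
from datetime import date, timedelta


def curate(docs: list[dict], today: date, max_age_days: int = 365) -> tuple[list[dict], list[dict]]:
    """Drop duplicate texts and split the rest into fresh and stale (needing review) documents."""
    seen: set[str] = set()
    fresh: list[dict] = []
    stale: list[dict] = []
    for doc in docs:
        key = " ".join(doc["text"].lower().split())  # normalize case and whitespace
        if key in seen:
            continue  # exact duplicate of an earlier document
        seen.add(key)
        if today - doc["updated"] > timedelta(days=max_age_days):
            stale.append(doc)
        else:
            fresh.append(doc)
    return fresh, stale


docs = [
    {"id": "a", "text": "Refund window is 30 days.", "updated": date(2024, 5, 1)},
    {"id": "b", "text": "refund window is  30 days.", "updated": date(2024, 6, 1)},
    {"id": "c", "text": "Shipping takes 5 days.", "updated": date(2021, 1, 1)},
]
fresh, stale = curate(docs, today=date(2024, 7, 1))
print([d["id"] for d in fresh], [d["id"] for d in stale])
```

Only the first copy of a duplicate is kept, so ordering the input newest-first would keep the most recent version.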

Moreover, the integration of diverse datasets may prompt concerns regarding data privacy and intellectual property. You will need to navigate these legal and ethical landscapes carefully, as your use of proprietary information or sensitive data could have significant consequences. Addressing data dependency issues is not just about utilizing a broad array of sources; it also involves understanding the implications of the data choices you make.
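On the privacy side, one widely used safeguard is to enforce access control at retrieval time, so that documents a user is not permitted to see never reach the generator’s prompt. A minimal sketch, assuming each document carries a hypothetical `groups` access list and a precomputed `score`:

```python
def allowed(doc: dict, user_groups: set[str]) -> bool:
    """A document is visible if the user shares at least one group with its access list."""
    return bool(doc["groups"] & user_groups)


def secure_retrieve(candidates: list[dict], user_groups: set[str], k: int = 3) -> list[str]:
    """Filter by permission before ranking, so restricted text never reaches the generator."""
    visible = [d for d in candidates if allowed(d, user_groups)]
    visible.sort(key=lambda d: d["score"], reverse=True)
    return [d["text"] for d in visible[:k]]


candidates = [
    {"text": "Q3 salary bands", "groups": {"hr"}, "score": 0.9},
    {"text": "Public holiday calendar", "groups": {"all"}, "score": 0.7},
]
print(secure_retrieve(candidates, {"all", "engineering"}))
```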

Scalability Considerations

Issues surrounding scalability can greatly affect your implementation of RAG in real-world applications. While RAG shows promise in generating sophisticated outputs, expanding its use across different environments can present challenges. As the size of the datasets increases, so does the need for advanced computational resources. You may encounter limitations in processing power that could hinder performance if not managed correctly. Systems must not only handle information retrieval effectively but must also scale up operations to accommodate heightened demand as your enterprise grows.
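A common way to scale retrieval is to split the index into shards, search each one, and merge the results. Because any document in the global top k must also be in its own shard’s top k, merging per-shard top-k lists gives the exact global answer. A sketch using Python’s `heapq` with made-up scores (shards are searched sequentially here; a real deployment would query them in parallel on separate machines):

```python
import heapq


def shard_top_k(shard: list[tuple[float, str]], k: int) -> list[tuple[float, str]]:
    """Each shard returns its own best k (score, doc_id) pairs."""
    return heapq.nlargest(k, shard)


def global_top_k(shards: list[list[tuple[float, str]]], k: int) -> list[tuple[float, str]]:
    """Merge per-shard results; the global top k is always among the per-shard top k lists."""
    candidates = [hit for shard in shards for hit in shard_top_k(shard, k)]
    return heapq.nlargest(k, candidates)


shards = [
    [(0.91, "a1"), (0.40, "a2"), (0.85, "a3")],
    [(0.88, "b1"), (0.10, "b2")],
    [(0.95, "c1"), (0.20, "c2")],
]
print(global_top_k(shards, 3))
```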

Additionally, organizational structure may play a vital role in scalability. You should consider how RAG fits into your existing workflows and whether additional training or changes in team structures may be necessary. Balancing operational efficiency with the adoption of new technology is delicate, and failing to plan for this transition could disrupt your productivity. Bear in mind that the successful implementation of RAG should not sacrifice system reliability or organizational clarity; both must be preserved as you scale.

This necessitates a careful evaluation of your current infrastructure and the technology stack employed. For large-scale applications of RAG, your organization may need to invest in cloud services or more robust server setups. By doing so, you ensure the RAG system can function seamlessly even as the volume of data increases, thereby reinforcing its value. Ultimately, while scalability presents its own set of challenges, your proactive approach can mitigate potential issues and allow RAG to thrive within your AI ecosystem.
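A back-of-envelope estimate helps size that infrastructure. Dense vectors need roughly `num_vectors × dim × bytes_per_value` bytes of raw storage; the 10 million chunks and 768 dimensions below are illustrative assumptions, not figures from any particular system.

```python
def index_memory_gb(num_vectors: int, dim: int, bytes_per_value: int = 4) -> float:
    """Raw storage for dense vectors in GB (1e9 bytes), excluding index overhead and source text."""
    return num_vectors * dim * bytes_per_value / 1e9


# Hypothetical corpus: 10 million chunks embedded into 768-dimensional vectors.
print(f"float32: {index_memory_gb(10_000_000, 768):.2f} GB")
print(f"int8:    {index_memory_gb(10_000_000, 768, bytes_per_value=1):.2f} GB")
```

At 4-byte floats that is about 30.7 GB before any index overhead; storing 1-byte quantized values would cut the raw figure to about 7.7 GB, at some cost in accuracy.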

Future Directions for RAG in AI Cognition

Your exploration of Retrieval-Augmented Generation (RAG) in AI cognition opens the door to an array of future possibilities that promise to reshape the landscape of artificial intelligence. As advancements in natural language processing and machine learning continue, RAG’s integration with other innovative technologies can cultivate more powerful cognitive frameworks that enhance user interaction and experience. This evolution fuels a new paradigm where AI systems efficiently retrieve and generate content tailored to your specific inquiries, making the exchange between humans and machines more seamless and effective. To gain deeper insights into RAG’s impact, take a look at What is Retrieval Augmented Generation(RAG) in 2024?.

Innovations on the Horizon

Innovations lie ahead as researchers and developers push the boundaries of RAG applications in AI cognition. Beyond enhancing conventional search functions, the next wave of RAG models is likely to incorporate advanced reasoning capabilities, allowing them to analyze information contextually and provide sophisticated outputs. This shift toward enriched reasoning empowers your AI tools to generate not only relevant but also highly insightful content, tailored specifically to the nuances of your requests. Tracking these advancements can substantively influence your approach to harnessing AI in various applications, from content creation to data analysis.

Moreover, as we look to the future, RAG’s role in enhancing multi-modal AI systems is poised to become more prominent. By combining text with visual, auditory, and other sensory modalities, RAG can curate richer interactions and experiences. For instance, you may find yourself interacting with an AI that can simultaneously analyze text while processing images, ultimately retrieving and generating more comprehensive insights than ever before. The potential for these multi-modal applications promises to deliver personalized experiences that resonate more profoundly with your needs and preferences.

Potential Ethical Implications

Along with the promising innovations in RAG, there lies a pressing need to address the potential ethical implications that these advancements entail. As AI systems become more adept at retrieving and generating content, the risk of misinformation and biased outputs becomes a legitimate concern. You should remain vigilant about how these technologies can inadvertently propagate stereotypes or misinformation, potentially impacting opinions and behaviors on a larger scale. Ensuring accountability and ethical considerations in the development and deployment of RAG models is imperative for fostering trust in AI technologies.

For instance, the automation of information retrieval and generation poses risks of amplifying harmful content or misinformation if the underlying datasets are flawed. This challenge necessitates stringent oversight in data selection and the establishment of guidelines that prioritize fairness and accuracy in outputs. Additionally, AI systems may disproportionately reflect the biases present in their training data, which can adversely affect decision-making in fields such as hiring, law enforcement, and healthcare. Engaging in meaningful dialogue regarding these ethical considerations empowers you to navigate the evolving landscape of AI cognition more responsibly.

Conclusion

The advent of Retrieval-Augmented Generation (RAG) represents a significant shift in how artificial intelligence engages with and synthesizes information. In your daily encounters with AI technologies, you may have noticed these systems increasingly providing not just answers but nuanced, context-rich responses. RAG strengthens this capability by combining generative models with large external knowledge sources. As you interact with AI, you can see firsthand how this shift allows for more dynamic conversations and tailored solutions, offering you a richer user experience. With RAG, AI systems can draw on a broader array of information and integrate it into their responses, substantially extending what they can do.

This transformation in AI functionality allows for more precise and context-aware communication, positioning RAG as a linchpin in the future of artificial intelligence. For you, this means that tasks which once required extensive manual intervention can now be augmented by AI that retrieves pertinent information. Whether you are searching for technical guidance, creative inspiration, or personalized recommendations, RAG can deliver responses that feel more intuitive and aligned with your needs. By pairing retrieval with robust generative models, RAG not only saves you time and effort but also lets you engage with AI as a collaborative partner rather than a mere tool.

Ultimately, the revolution ushered in by RAG could fundamentally redefine the interface between humans and machines in cognition. It offers a glimpse into a future where AI seamlessly incorporates real-time information into its operations, paving the way for more interactive and intuitive systems. As you consider the implications of this technology, remember that RAG’s potential reaches far beyond simple data processing; it heralds a new era in which AI engages with your queries, context, and preferences in profoundly meaningful ways. By embracing RAG, you are not just witnessing a technological evolution; you are participating in a transformation that rethinks what it means to interact with intelligent systems.

FAQ

Q: What is RAG and how does it differ from traditional AI models?

A: RAG, or Retrieval-Augmented Generation, is an advanced AI framework that combines retrieval-based methods with generative models. Unlike traditional AI models that generate responses solely based on pre-trained knowledge, RAG leverages external information by retrieving relevant documents or data points from a knowledge base. This integration allows RAG to produce more accurate and contextually relevant responses, enhancing the overall quality of AI cognition.

Q: How does RAG improve the accuracy of AI-generated responses?

A: By incorporating a retrieval component, RAG can draw on information from external sources that may be more current than its training data. This allows it to provide answers that are not only derived from its training data but also augmented with current, source-backed knowledge. The retrieval mechanism helps ground responses in the retrieved data, reducing (though not eliminating) the chances of generating outdated or incorrect information; answers are only as reliable as the sources retrieved.

Q: In what applications can RAG be utilized to enhance AI performance?

A: RAG can be effectively employed in diverse applications such as customer support, knowledge management, content creation, and research assistance. For instance, in customer support, RAG can retrieve relevant articles or documentation to provide precise answers to user inquiries. In research, it can gather up-to-date information and present it in a coherent manner, making it a valuable tool for professionals who require current data and insights.

Q: What role does the retrieval component play in RAG’s operation?

A: The retrieval component in RAG serves as a bridge between the model’s built-in knowledge and external, up-to-date data. During the response generation process, RAG first identifies relevant documents or snippets related to the query. This retrieved information then informs and guides the generated response, allowing the AI to craft replies that are more informed and nuanced than what a generative model could produce on its own.

Q: What are some challenges associated with implementing RAG in AI systems?

A: While RAG offers significant advantages, there are challenges in its implementation. Ensuring the quality and reliability of the retrieved data is paramount; poor-quality sources can lead to inaccurate responses. Additionally, the integration of retrieval and generation components requires sophisticated architecture and tuning to optimize performance. Moreover, managing the latency between retrieval and response generation can impact user experience, necessitating careful system design to balance speed with accuracy.
